Today, we'll build a program that detects people in a video feed in near real time (how fast depends on your CPU).

In my post on AI person detection with Darknet, we saw how to use deep learning to detect objects in a picture. That post used a YOLOv3 neural network trained to recognize and classify objects in 80 categories (person, car, truck, potted plant, giraffe, …).

YOLOv3 is one of the most advanced object detection algorithms: when run on powerful GPUs, it is both highly accurate and fast compared with other algorithms.

However, even on a GeForce GTX 1080 Ti, it takes 200 ms to detect objects in a single image. To process a video stream in real time at 24 images per second, the processing time must come down to about 40 ms per image or less (1/24 s is roughly 42 ms).
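The frame-rate arithmetic above can be written as two tiny helpers. frame_budget_ms() and max_fps() are hypothetical names used only for illustration; they simply invert frames per second into milliseconds per frame and back.

```python
def frame_budget_ms(fps):
    """Maximum processing time per frame (ms) needed to sustain `fps` frames per second."""
    return 1000.0 / fps


def max_fps(processing_ms):
    """Highest frame rate reachable if every frame takes `processing_ms` to process."""
    return 1000.0 / processing_ms


print(round(frame_budget_ms(24), 1))  # 41.7
print(max_fps(200))                   # 5.0
```

At 200 ms per image, a detector can handle at most 5 frames per second, far from the 24 needed for smooth real-time video.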

Powerful deep learning workstations are also expensive and power-hungry, so they are impractical if your goal is a simple home security system that is always on.

We won't use deep learning here. Instead, we'll use simple machine learning algorithms that can be evaluated very quickly on a CPU.

The main focus of this article is building an AI person detection and counting system that works with a webcam, your own videos, or photographs, but there is plenty more to learn along the way. This intermediate-level computer vision project will help you master the core ideas behind classical object detection. Let's get started.

How To Use AI Person Detection?

Once you have a detection output, how you use it is up to you. Details such as the detection criteria depend on your use case, and the best AI person detection tool in one situation might not be effective in another.

Recent advances in model training and multi-layer deep networks have made machines proficient at these tasks. Consumer-facing examples include DeepFace and DeepID, two early pioneers of the feature extraction and comparison approach, which has become so effective that people now use face recognition to unlock their phones and tablets.

Cloud processing was a significant development in computer vision (CV) because it gave all developers access to powerful tools. Newer CV platforms go further, offering simple access to open APIs and giving developers even more freedom. Deep learning applications can now be built for edge devices.

Core computer vision services, including AI person detection, can now process and analyze your images without requiring a computer vision specialist or a cloud connection. Today, businesses of all sizes can develop and deploy complex computer vision applications on low-resource, low-power hardware.

How Does HOG Work?

If you only want to run the detector, you can skip ahead to the project.

But if you're curious about how the algorithm works, keep reading; I've tried to explain it clearly.

The original publication by Dalal and Triggs demonstrates the performance of the HOG algorithm. However, to really understand how the algorithm works, I had to dig into that paper's references, in particular a ground-breaking article on hand gesture recognition.


To understand how HOG (Histogram of Oriented Gradients) works, you first need to know what a histogram and a gradient are.

What Is a Histogram?

A histogram represents statistical data with rectangles (bars) that show how often values fall into each of a series of equal-sized numerical intervals, called bins. In its most common form, the bins are laid out along the horizontal axis and the frequency (count) is plotted on the vertical axis.
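As a quick illustration, NumPy's np.histogram() counts values into equal-width bins. The data below is made up for the example.

```python
import numpy as np

data = [1, 2, 2, 3, 3, 3, 7]

# Four equal-width bins over [0, 8]: [0, 2), [2, 4), [4, 6), [6, 8]
counts, edges = np.histogram(data, bins=4, range=(0, 8))

print(counts)  # [1 5 0 1]
print(edges)   # [0. 2. 4. 6. 8.]
```

Each bin is half-open except the last, which also includes its right edge, so the value 7 lands in the final bin.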

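What Is a Gradient?

The gradient generalizes the derivative, the rate of change of a function, to functions of several variables. It is a vector that points in the direction in which the function increases fastest, and it is zero at a local minimum or maximum, because there is no single direction of increase there. In an image, the gradient at each pixel measures how quickly the intensity changes horizontally and vertically: its magnitude is large at edges, and its direction (orientation) is perpendicular to the edge.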
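Here is a minimal NumPy sketch of the per-pixel gradient that HOG starts from. It uses the centered [-1, 0, 1] difference filter described by Dalal and Triggs and keeps the unsigned orientation in the 0 to 180 degree range; image_gradients() is a hypothetical helper for illustration, not an OpenCV function.

```python
import numpy as np


def image_gradients(img):
    """Return gradient magnitude and unsigned orientation (degrees, 0 to 180)."""
    img = img.astype(np.float32)  # avoid uint8 wrap-around when subtracting
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    # Centered [-1, 0, 1] differences; border pixels are left at zero
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation: a gradient and its opposite share the same angle
    orientation = np.degrees(np.arctan2(gy, gx)) % 180.0
    return magnitude, orientation


# A 6x6 image with a vertical edge: dark left half, bright right half
img = np.zeros((6, 6), dtype=np.uint8)
img[:, 3:] = 255
mag, ori = image_gradients(img)
print(mag[2])  # non-zero only in the two columns on either side of the edge
print(ori[2])  # horizontal gradient, so every orientation is 0 degrees
```

The strong response sits right at the vertical edge, and its orientation is horizontal, perpendicular to the edge.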

AI Person Detection with OpenCV Project

- Prerequisites Of The Project

To complete this Python project, you should be familiar with the basics of Python programming and the OpenCV library. You will need the following libraries:

- OpenCV: a powerful computer vision library

- imutils: convenience functions for image processing, such as resizing

- NumPy: for scientific computing; OpenCV stores images as NumPy arrays

- argparse: for parsing command-line input (part of the Python standard library)

Run the following command in your terminal to install the required libraries:

pip install opencv-python imutils numpy

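- Histogram of Oriented Gradients (HOG) Descriptor

HOG is a feature descriptor used in computer vision and image processing for object detection, including person detection. The image is divided into small cells, a histogram of gradient orientations (weighted by gradient magnitude) is computed for each cell, the histograms are normalized over overlapping blocks of cells, and the concatenated result is scored by a linear Support Vector Machine (SVM) as a detection window slides across the image. Conveniently, OpenCV already provides an efficient implementation that combines the HOG descriptor with a pre-trained SVM, which makes it one of the most widely used classical person detection methods.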
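The cell histogram and the descriptor size can be sketched in NumPy. This is a simplified illustration, not OpenCV's implementation: cell_histogram() assigns each gradient to a single 20-degree bin (the original method interpolates between neighbouring bins and then normalizes each block), and hog_length() is a hypothetical helper that counts features using OpenCV's default HOGDescriptor parameters (64x128 window, 16x16 blocks, 8x8 block stride, 8x8 cells, 9 bins).

```python
import numpy as np


def cell_histogram(magnitude, orientation, n_bins=9):
    """Orientation histogram for one cell (simplified: hard binning, no block normalization)."""
    hist, _ = np.histogram(orientation, bins=n_bins, range=(0.0, 180.0),
                           weights=magnitude)  # each vote is weighted by gradient strength
    return hist


def hog_length(win=(64, 128), block=(16, 16), stride=(8, 8), cell=(8, 8), n_bins=9):
    """Number of features in a HOG descriptor for one detection window."""
    blocks_x = (win[0] - block[0]) // stride[0] + 1
    blocks_y = (win[1] - block[1]) // stride[1] + 1
    cells_per_block = (block[0] // cell[0]) * (block[1] // cell[1])
    return blocks_x * blocks_y * cells_per_block * n_bins


# An 8x8 cell whose gradients all point at 45 degrees with magnitude 2
mag = np.full((8, 8), 2.0)
ori = np.full((8, 8), 45.0)
print(cell_histogram(mag, ori))  # all 128 votes land in the 40-60 degree bin
print(hog_length())              # 3780
```

For the default window this gives 7 x 15 blocks, 4 cells per block and 9 bins, so 3780 values per window, the same size cv2.HOGDescriptor().getDescriptorSize() reports.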

Steps To Build The Human Detection Project

- Importing the libraries:

```python
import cv2
import imutils
import numpy as np
import argparse
```

- Creating the model for detecting humans:

As mentioned earlier, we'll use OpenCV's built-in HOGDescriptor with an SVM. The code below sets it up:

```python
HOGCV = cv2.HOGDescriptor()
HOGCV.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
```

cv2.HOGDescriptor_getDefaultPeopleDetector() returns the coefficients of OpenCV's pre-trained linear SVM for people detection, and setSVMDetector() loads them into our HOG descriptor.
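To make the role of those coefficients concrete, here is a minimal NumPy sketch of how a linear SVM scores a single detection window. It assumes OpenCV's layout, in which the detector vector holds one weight per HOG feature followed by one bias term; linear_svm_score() and is_person() are hypothetical helpers, and OpenCV's own detector additionally handles the sliding window and the multiple scales.

```python
import numpy as np


def linear_svm_score(descriptor, coefficients):
    """Score one window: dot(weights, descriptor) + bias.

    Assumes the last coefficient is the bias and the rest are per-feature weights.
    """
    weights = np.asarray(coefficients[:-1], dtype=float)
    bias = float(coefficients[-1])
    return float(np.dot(weights, np.asarray(descriptor, dtype=float)) + bias)


def is_person(descriptor, coefficients, hit_threshold=0.0):
    # A window counts as a detection when its score reaches the threshold
    return linear_svm_score(descriptor, coefficients) >= hit_threshold


# Toy 3-feature example (a real HOG descriptor has 3780 features)
coeffs = [0.5, -1.0, 2.0, -0.25]
print(linear_svm_score([1, 1, 1], coeffs))  # 1.25
print(is_person([0, 1, 0], coeffs))         # False
```

The hit_threshold argument plays the same role as the hitThreshold parameter of detectMultiScale(), which defaults to 0.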

- The detect() Function

This is where the real work happens.

A video is a sequence of still images, called frames, displayed in quick succession. We detect the people in each frame and then show the frames one after another, so the output still looks like a video.

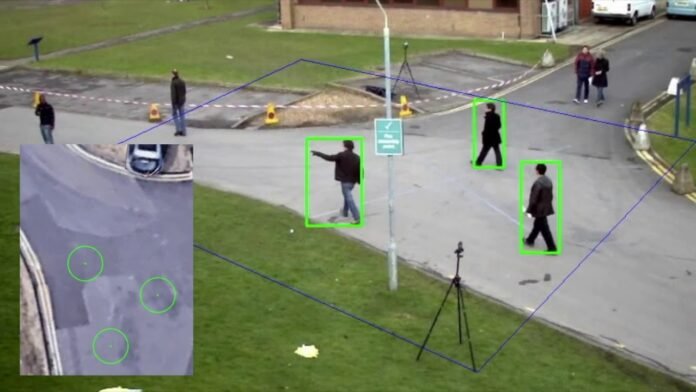

That is exactly what our detect() function does: it takes a frame, finds the people in it, draws a green box around each person, displays the frame, and returns it.

```python
def detect(frame):
    # Slide the HOG + SVM detector over the frame at several scales
    bounding_box_coordinates, weights = HOGCV.detectMultiScale(
        frame, winStride=(4, 4), padding=(8, 8), scale=1.03)

    person = 1
    for x, y, w, h in bounding_box_coordinates:
        # Green box around each detection, labelled in red (OpenCV colors are BGR)
        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        cv2.putText(frame, f'person {person}', (x, y),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 1)
        person += 1

    # Status and total count in the top-left corner, in blue
    cv2.putText(frame, 'Status : Detecting ', (40, 40),
                cv2.FONT_HERSHEY_DUPLEX, 0.8, (255, 0, 0), 2)
    cv2.putText(frame, f'Total Persons : {person - 1}', (40, 70),
                cv2.FONT_HERSHEY_DUPLEX, 0.8, (255, 0, 0), 2)
    cv2.imshow('output', frame)
    return frame
```

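detectMultiScale() does all the heavy lifting. In the call above, winStride sets how many pixels the detection window moves at each step, padding adds pixels around each window, and scale=1.03 is the resize factor between successive levels of the image pyramid; smaller strides and scales can find more people but run more slowly. It returns two values:

- An array of bounding boxes, one per detected person, in the format (x, y, w, h), where (x, y) is the top-left corner of the box and w and h are its width and height.

- A confidence score (the SVM output) for each detection.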
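If you want to drop weak detections, the two return values can be combined as in the sketch below. filter_detections() and its min_weight threshold of 0.5 are hypothetical choices for illustration; a suitable threshold depends on your footage. The weights are flattened, so either a flat or a column-shaped array works.

```python
import numpy as np


def filter_detections(boxes, weights, min_weight=0.5):
    """Keep boxes whose SVM score exceeds min_weight, returned as (x1, y1, x2, y2)."""
    boxes = np.asarray(boxes, dtype=int).reshape(-1, 4)
    weights = np.asarray(weights, dtype=float).ravel()
    keep = weights > min_weight
    kept = boxes[keep]
    # Convert (x, y, w, h) to top-left and bottom-right corner coordinates
    corners = np.column_stack([kept[:, 0], kept[:, 1],
                               kept[:, 0] + kept[:, 2], kept[:, 1] + kept[:, 3]])
    return corners, weights[keep]


boxes = [[10, 20, 64, 128], [200, 40, 70, 140]]
weights = [[1.2], [0.3]]
corners, scores = filter_detections(boxes, weights)
print(corners)  # only the first box survives: [[ 10  20  74 148]]
print(scores)   # [1.2]
```

Inside detect(), the boxes returned by detectMultiScale() could be passed through a filter like this before drawing.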

Now that we have a detection function, let's build the detector around it.

- The humanDetector() Function

There are two ways to get video:

- From a webcam

- From a video file on disk

The detector can also process still images. The function checks its inputs in order: if the camera flag is set, it opens the webcam; otherwise, it uses the video path if one was provided, and failing that, the image path. If an output path is given for camera or video input, it also opens a VideoWriter to save the annotated frames.

```python
def humanDetector(args):
    image_path = args["image"]
    video_path = args["video"]
    # Accept "true", "True", "TRUE", ... for the camera flag
    camera = str(args["camera"]).lower() == 'true'

    writer = None
    # Only video input (camera or file) is saved through a VideoWriter
    if args['output'] is not None and image_path is None:
        writer = cv2.VideoWriter(args['output'], cv2.VideoWriter_fourcc(*'MJPG'),
                                 10, (600, 600))

    if camera:
        print('[INFO] Opening Web Cam.')
        detectByCamera(writer)
    elif video_path is not None:
        print('[INFO] Opening Video from path.')
        detectByPathVideo(video_path, writer)
    elif image_path is not None:
        print('[INFO] Opening Image from path.')
        detectByPathImage(image_path, args['output'])

    # Flush and close the output video file, if one was opened
    if writer is not None:
        writer.release()
```
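The order in which humanDetector() picks its input can be isolated into a small pure function, which makes the priority easy to check without opening a camera. choose_source() is a hypothetical helper, not part of the original script; it compares the camera flag case-insensitively.

```python
def choose_source(args):
    """Return 'camera', 'video', 'image', or None, checking inputs in that order."""
    # The camera flag arrives from the command line as a string such as "true" or "True"
    if str(args.get("camera")).lower() == "true":
        return "camera"
    if args.get("video") is not None:
        return "video"
    if args.get("image") is not None:
        return "image"
    return None


print(choose_source({"camera": False, "video": "clip.mp4", "image": "photo.jpg"}))  # video
print(choose_source({"camera": "True", "video": "clip.mp4", "image": None}))        # camera
```

Because the camera is checked first, passing -c together with -v uses the webcam and ignores the video path.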

- The detectByCamera() Function

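```python
def detectByCamera(writer):
    video = cv2.VideoCapture(0)  # 0 = default webcam
    print('Detecting people...')

    while True:
        check, frame = video.read()
        if not check:  # no frame: camera unavailable or disconnected
            break
        frame = detect(frame)
        if writer is not None:
            # The writer was opened at 600x600; frames of another size may not be written
            writer.write(cv2.resize(frame, (600, 600)))
        key = cv2.waitKey(1)
        if key == ord('q'):  # press q to quit
            break

    video.release()
    cv2.destroyAllWindows()
```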

In this function, passing 0 to cv2.VideoCapture() tells OpenCV to capture from the default webcam. video.read() reads one frame at a time and returns two values: check, which is True if a frame was read successfully and False otherwise, and the frame itself.

We then call detect() on each frame and, if an output file was requested, write the annotated frame to it. Pressing q ends the loop.
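Because the speed depends on your CPU, it can help to measure how many frames per second the loop actually processes. FpsMeter below is a hypothetical helper (not part of OpenCV or imutils) that keeps a rolling window of timestamps from time.perf_counter(); the optional now argument exists only so the arithmetic can be checked with fixed times.

```python
import time
from collections import deque


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window=30):
        self.stamps = deque(maxlen=window)  # oldest timestamps drop off automatically

    def tick(self, now=None):
        # Record the moment a frame finished processing
        self.stamps.append(time.perf_counter() if now is None else now)

    def fps(self):
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        # N timestamps span N - 1 frame intervals
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


meter = FpsMeter()
for t in (0.0, 0.04, 0.08):  # three frames, 40 ms apart
    meter.tick(t)
print(round(meter.fps(), 1))  # 25.0
```

Inside the camera loop, you might call meter.tick() right after detect(frame) and print meter.fps(); a value well below 24 means each frame takes longer than the real-time budget.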

- The detectByPathVideo() Function

This function is very similar to the previous one, except that it reads from a video file at a given path. First, we check whether the video at that path can be read.

```python
def detectByPathVideo(path, writer):
    video = cv2.VideoCapture(path)
    check, frame = video.read()
    if not check:
        print('Video Not Found. Please Enter a Valid Path (Full path of Video Should be Provided).')
        video.release()
        return

    print('Detecting people...')
    while video.isOpened():
        # check is True if reading was successful
        check, frame = video.read()
        if check:
            # Downscale large frames to at most 800 px wide to speed up detection
            frame = imutils.resize(frame, width=min(800, frame.shape[1]))
            frame = detect(frame)
            if writer is not None:
                # The writer was opened at 600x600; frames of another size may not be written
                writer.write(cv2.resize(frame, (600, 600)))
            key = cv2.waitKey(1)
            if key == ord('q'):
                break
        else:
            break  # end of the video

    video.release()
    cv2.destroyAllWindows()
```

Inside the loop, we check that each frame was read successfully and resize it to at most 800 pixels wide before running detection, which keeps processing time down on large videos. When no frame can be read (at the end of the video), the loop ends.
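The resize step keeps the aspect ratio, so the new height follows from the width ratio. resized_shape() is a hypothetical helper that sketches this arithmetic, assuming imutils.resize() scales the height by the same factor as the width and truncates it to an integer.

```python
def resized_shape(height, width, max_width=800):
    """(height, width) of a frame after resizing to min(max_width, width) pixels wide."""
    new_width = min(max_width, width)
    ratio = new_width / float(width)
    # Height is scaled by the same ratio to keep the aspect ratio
    return int(height * ratio), new_width


print(resized_shape(1080, 1920))  # (450, 800): Full HD is scaled down
print(resized_shape(480, 640))    # (480, 640): already narrow enough, unchanged
```

A Full HD frame ends up with about 5.8 times fewer pixels, which makes the sliding-window search much cheaper.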

- The detectByPathImage() Function

This function detects people in a single image.

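```python
def detectByPathImage(path, output_path):
    image = cv2.imread(path)
    if image is None:  # imread returns None instead of raising on a bad path
        print('Image Not Found. Please Enter a Valid Path.')
        return
    # Downscale large images to at most 800 px wide to speed up detection
    image = imutils.resize(image, width=min(800, image.shape[1]))
    result_image = detect(image)
    if output_path is not None:
        cv2.imwrite(output_path, result_image)
    cv2.waitKey(0)  # keep the window open until a key is pressed
    cv2.destroyAllWindows()
```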

- The argsParser() Function

The argsParser() function parses the arguments passed to our script from the terminal and returns them as a dictionary. The parser accepts four arguments:

- Image: the path to an image file on your computer

- Video: the path to a video file on your computer

- Camera: if set to "true", the detectByCamera() function is run

- Output: an optional path where the annotated result is saved

```python
def argsParser():
    arg_parse = argparse.ArgumentParser()
    arg_parse.add_argument("-v", "--video", default=None, help="path to Video File ")
    arg_parse.add_argument("-i", "--image", default=None, help="path to Image File ")
    arg_parse.add_argument("-c", "--camera", default=False, help="Set true if you want to use the camera.")
    arg_parse.add_argument("-o", "--output", type=str, help="path to optional output video file")
    # vars() turns the parsed Namespace into a plain dict, e.g. args['video']
    args = vars(arg_parse.parse_args())
    return args
```
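To see what the dictionary looks like, you can hand argparse an explicit argument list instead of reading the real command line. build_parser() is a hypothetical copy of the parser above that is returned unparsed, so sample arguments can be supplied directly.

```python
import argparse


def build_parser():
    # Same four options as argsParser(), returned unparsed so argv can be supplied
    parser = argparse.ArgumentParser()
    parser.add_argument("-v", "--video", default=None, help="path to Video File")
    parser.add_argument("-i", "--image", default=None, help="path to Image File")
    parser.add_argument("-c", "--camera", default=False, help="Set true if you want to use the camera.")
    parser.add_argument("-o", "--output", type=str, help="path to optional output video file")
    return parser


args = vars(build_parser().parse_args(["-c", "True", "-o", "output.avi"]))
print(args)  # {'video': None, 'image': None, 'camera': 'True', 'output': 'output.avi'}
```

Note that the camera value is the string 'True', not a boolean, so it only matches 'true' if the comparison ignores case.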

- The Main Block

The project is almost complete.

```python
if __name__ == "__main__":
    HOGCV = cv2.HOGDescriptor()
    HOGCV.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
    args = argsParser()
    humanDetector(args)
```

Run The Human Detection Project

Run one of the commands below, depending on your input source:

- To process a video file:

python main.py -v 'Path_to_video'

- To process an image file:

python main.py -i 'Path_to_image'

- To use the webcam:

python main.py -c true

- To save the results:

python main.py -c true -o 'file_name'

Conclusion

In this project, we built an efficient people counter with HOG and OpenCV that accepts input from a live camera, a video file, or an image. This intermediate-level person detection project is good practice with Python and its computer vision libraries.

If you want to know more about the human detection process, feel free to contact Team Folio3. Our experts are happy to help with any questions about AI.